XML sitemaps & Search Engine Optimisation

If you’re seeking to optimise your website and to improve search engine visibility, you may have come across XML sitemaps.

There’s a lot of information out there about XML sitemap guidelines and SEO, but most of it is scattered across the web. Google themselves have published several different blog posts, videos, and FAQ articles regarding the different guidelines to follow when trying to optimise your sitemaps, but unfortunately you’ll have to look around these different posts and articles to gather all of the important information yourself.

In this article, I’ll cover in detail the most important guidelines to keep in mind when creating, verifying, and submitting your sitemap.

What Is An XML Sitemap?

Let’s start with the fundamental information, and define what a sitemap is.

An XML sitemap is a list of the URLs contained on your website, marked up in the Extensible Markup Language (XML), which can be easily read by search engines and crawlers. The use of sitemaps is important because it allows you as a webmaster to inform search engines of all the URLs you’d like to have crawled, and to let them know about new content that’s been added to your website – as well as the relationship that the listed URLs have with other URLs in your website.

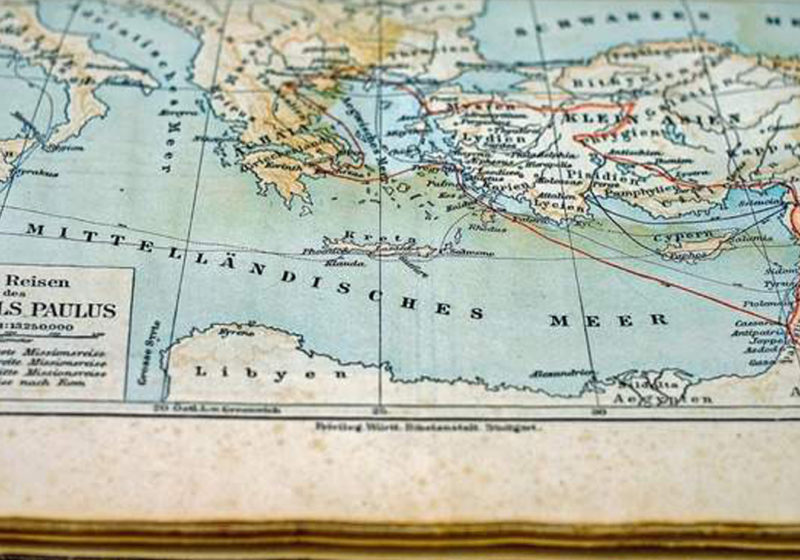

Think of it as an actual map that search engines can use to understand how to navigate your website.

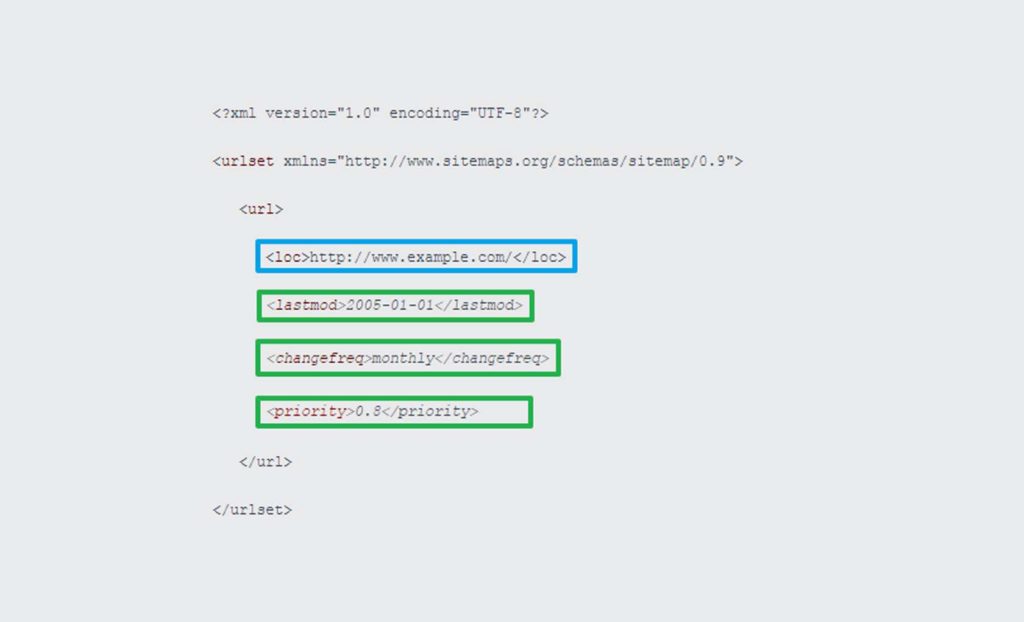

This XML file is then uploaded to your website and is often, but not always, situated in a location such as www.example.com/sitemap.xml. Below is an example of what a simple sitemap looks like:
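As a minimal sketch, the Python snippet below holds a one-URL sitemap as a string and reads its fields back using only the standard library. The `lastmod`, `changefreq` and `priority` values are illustrative assumptions, and `read_sitemap` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

# A simple sitemap with a single URL entry (attribute values are illustrative).
SITEMAP_XML = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://www.example.com/</loc>
    <lastmod>2024-01-15</lastmod>
    <changefreq>monthly</changefreq>
    <priority>0.8</priority>
  </url>
</urlset>
"""

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def read_sitemap(xml_text):
    """Return one dict per <url> entry, keyed by child element name."""
    root = ET.fromstring(xml_text.encode("utf-8"))
    entries = []
    for url in root.findall("sm:url", NS):
        # Tags look like "{namespace}loc"; keep only the local name.
        entries.append({child.tag.split("}", 1)[1]: child.text for child in url})
    return entries


for entry in read_sitemap(SITEMAP_XML):
    print(entry["loc"], entry.get("lastmod"))
```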

The above XML sitemap is composed of only one URL, https://www.example.com/. The elements that follow the URL (lastmod, changefreq and priority) are its different attributes.

Here’s a quick explanation of what these attributes do:

- lastmod dates the last time the page was modified (it uses the standard W3C date format)

- changefreq is the frequency with which the page is likely to change

- priority assigns a numerical ‘weight’ to the page that measures its importance

The SEO importance of these items will be discussed in the next section.

So far, XML sitemaps seem simple enough, but issues can arise when you have a very large domain or a site that updates its content frequently. If your website has 200,000 pages that are likely to change monthly, it would be impractical to write and edit your own sitemap.

Thankfully, most content management systems have plugins or scripts that will automate this process and keep your sitemap up to date. The free ones will usually have a URL cap, but there is a range of paid options that are more comprehensive.

The important thing is to understand how to configure these scripts to make sure that your sitemap is in line with the guidelines required by search engines.

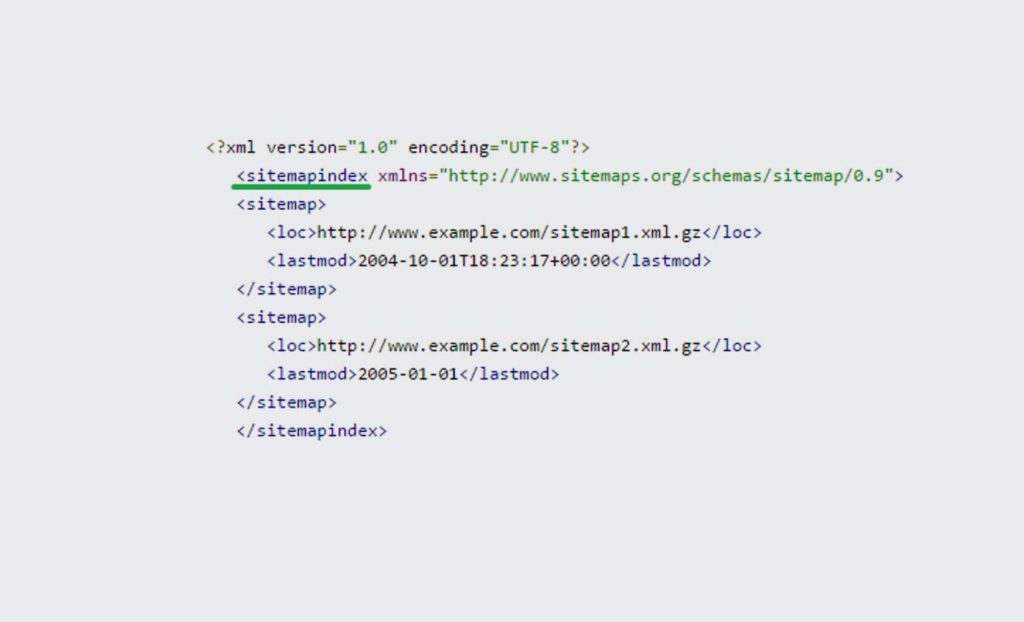

Another very important note on XML sitemap structure is that you can only include 50,000 URLs (and a file size of 50MB, uncompressed) per sitemap. For anything larger you will need to split your sitemap across several different files and use a sitemap index file. This index will then list all of the different sitemap files. This is common practice for large eCommerce websites. Here’s an example of a sitemap index:
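As a minimal sketch, the Python snippet below splits a URL list into files of at most 50,000 URLs and builds an index that lists them. The product URLs and the `sitemap-N.xml` file names are hypothetical, as are the helper names.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000


def chunk_urls(urls, size=MAX_URLS_PER_SITEMAP):
    """Split a list of URLs into consecutive chunks of at most `size` items."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def build_sitemap_index(sitemap_urls):
    """Return sitemap index XML listing each child sitemap file."""
    index = ET.Element("sitemapindex", {"xmlns": SITEMAP_NS})
    for sitemap_url in sitemap_urls:
        sitemap = ET.SubElement(index, "sitemap")
        ET.SubElement(sitemap, "loc").text = sitemap_url
    body = ET.tostring(index, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical site with 120,000 product pages.
all_urls = [f"https://www.example.com/product/{n}" for n in range(120_000)]
chunks = chunk_urls(all_urls)

# One child sitemap per chunk; the file naming scheme is an assumption.
child_sitemaps = [
    f"https://www.example.com/sitemap-{i}.xml" for i in range(1, len(chunks) + 1)
]
index_xml = build_sitemap_index(child_sitemaps)
print(index_xml)
```

Each chunk would then be written out as its own sitemap file, and only the index needs to be submitted.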

What Does An XML Sitemap Do For SEO?

Now that we understand what a basic sitemap looks like, let’s delve into what it does for your SEO.

Let’s assume that you’ve managed to collect all the URLs you want Google to have a look at into an XML sitemap – but what next? Does it mean that once you upload this sitemap search engines will automatically index the pages in it?

Unfortunately, the simple answer is no. There is no guarantee that listing a URL on your sitemap will grant it a spot in the SERPs. Sitemaps are only used as an indication to search engines of the URLs you think are important in your website. It also shows the structure of your website to search engines, so that they may conduct a more informed crawl of your website.

For example, if you have a very active website with different kinds of content that will change frequently, then having an up-to-date sitemap will proactively inform search engines of these changes.

To be able to do this, your sitemap must include the lastmod attribute for each URL. This is a mandatory guideline that Google set out, and it is the only URL attribute (out of the ones shown previously) that Google looks at when crawling the URLs in the sitemap.

Although the change frequency and priority might seem important to you, Google only wants you to let them know when a specific page was last modified. In fact, Google also says that it’s important to pair up an Atom/RSS Feed alongside a proper sitemap to have the best SEO performance.
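A minimal sketch of how a lastmod value could be produced in the W3C date format from a page's modification time. The helper name `to_lastmod` is hypothetical, and treating naive datetimes as UTC is an assumption.

```python
from datetime import datetime, timezone


def to_lastmod(modified_at, date_only=False):
    """Format a datetime as a W3C datetime string suitable for <lastmod>."""
    if modified_at.tzinfo is None:
        # Assumption: timestamps without a timezone are treated as UTC.
        modified_at = modified_at.replace(tzinfo=timezone.utc)
    if date_only:
        return modified_at.date().isoformat()
    # Drop microseconds so the value stays in the YYYY-MM-DDThh:mm:ss+hh:mm form.
    return modified_at.replace(microsecond=0).isoformat()


edited = datetime(2024, 1, 15, 9, 30, tzinfo=timezone.utc)
print(to_lastmod(edited))                  # 2024-01-15T09:30:00+00:00
print(to_lastmod(edited, date_only=True))  # 2024-01-15
```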

XML Sitemap Tips

Now that we’ve covered the fundamentals of what a sitemap is and how it helps your SEO, let’s move on to the guidelines and tips. Make sure you read through them; not following some of these guidelines risks search engines disregarding the sitemap altogether, and we don’t want that.

1. Use Clean Sitemaps

One of the first things we do when auditing sitemaps is crawl them to see if the URLs used are clean. I use this term in the same way Bing does in its guidelines: you shouldn’t list URLs of duplicate pages or URLs that return status codes that aren’t 200-OK.

If you have duplicates of the same URL, make sure you only list the canonical URL you use on your website.

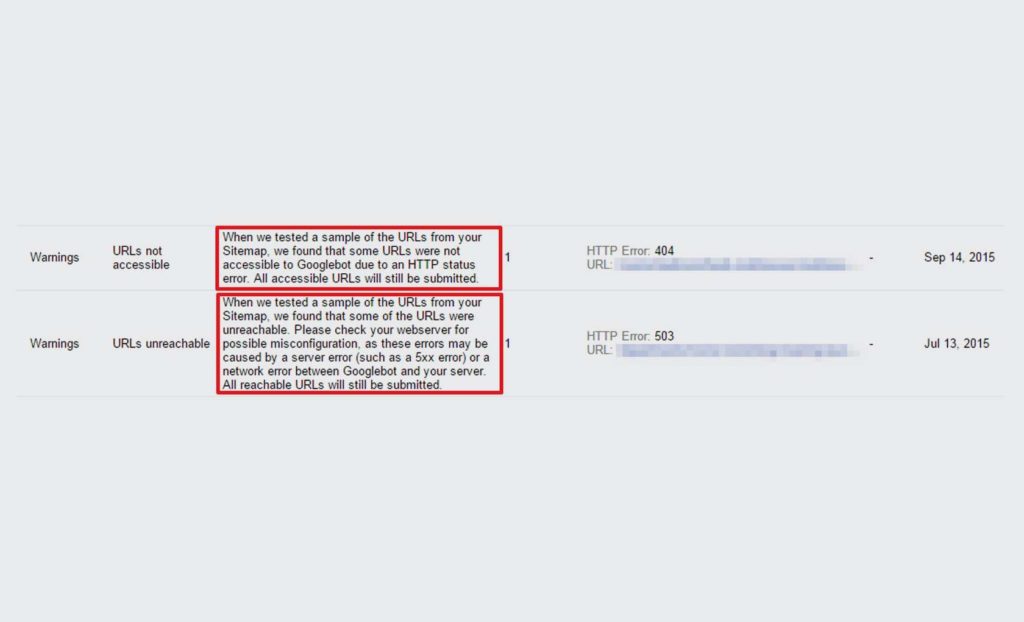

Make sure that all URLs used return a 200-OK status code. Google will treat any other status code as an error when you submit your sitemap (more on this later). If your website uses server redirects (3xx Status Codes) make sure you list the target URL in the sitemap.
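The cleaning rules above can be sketched as a small filter over crawl results. This is only an illustration: `CrawlResult` is a hypothetical record that a crawler of your choice would need to fill in, and the URLs are made up.

```python
from typing import NamedTuple, Optional


class CrawlResult(NamedTuple):
    """Hypothetical record produced by crawling one candidate URL."""
    url: str
    status: int
    canonical: Optional[str] = None  # rel="canonical" target, if the page has one


def clean_urls(results):
    """Keep URLs that return 200, are their own canonical, and are not repeated."""
    seen = set()
    clean = []
    for result in results:
        if result.status != 200:
            continue  # redirects (3xx) and errors (4xx/5xx) are left out
        if result.canonical and result.canonical != result.url:
            continue  # duplicate page: only its canonical URL belongs in the sitemap
        if result.url in seen:
            continue
        seen.add(result.url)
        clean.append(result.url)
    return clean


crawl = [
    CrawlResult("https://www.example.com/", 200, "https://www.example.com/"),
    CrawlResult("https://www.example.com/?ref=nav", 200, "https://www.example.com/"),
    CrawlResult("https://www.example.com/old-page", 301),
    CrawlResult("https://www.example.com/missing", 404),
    CrawlResult("https://www.example.com/shoes", 200),
]
print(clean_urls(crawl))
```

Redirecting URLs are simply dropped here; their targets should be crawled and listed in their own right.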

2. Other URLs To Exclude

As we stated in the first point, sitemaps are required to be clean, and this is also true for URLs that are excluded via the robots.txt file. After all, you would be advising search engines to crawl and index specific pages that you’d also like blocked. This sends a mixed message, which Bing and Google don’t like.

Similar to the canonical URL being used, do not list print and email versions of URLs, secure eCommerce pages (such as login pages), or pages excluded with the robots noindex meta tag.
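A minimal sketch of the robots.txt check, using Python's standard `urllib.robotparser`. The robots.txt rules and URLs are made-up examples, and `allowed_by_robots` is a hypothetical helper. Pages excluded with a noindex meta tag are not covered, as detecting those requires fetching each page.

```python
from urllib.robotparser import RobotFileParser


def allowed_by_robots(robots_txt, urls, user_agent="*"):
    """Return only the URLs that the given robots.txt allows to be crawled."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if parser.can_fetch(user_agent, url)]


# Illustrative robots.txt content.
ROBOTS_TXT = """User-agent: *
Disallow: /checkout/
Disallow: /print/
"""

candidates = [
    "https://www.example.com/",
    "https://www.example.com/checkout/basket",
    "https://www.example.com/print/shoes",
    "https://www.example.com/shoes",
]
print(allowed_by_robots(ROBOTS_TXT, candidates))
```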

3. Domains, Protocols & XML Sitemaps

This is a common point on which webmasters get stuck: what to do if you have a website with different sub-domains, and some pages with an HTTPS protocol?

The easiest way to remember this is to always think that an XML sitemap must use the same sub-domain and HTTP protocol as the URLs included in it.

If your site has different sub-domains, the URLs on shop.example.com shouldn’t be located in the sitemap of www.example.com.

Regarding the protocol, it means that a sitemap that has http URLs should be found at http://www.example.com/sitemap. If your site has a mix of protocols, make sure you keep this in mind: don’t list URLs that don’t use the same protocol as the XML sitemap.

If you want to list HTTPS URLs, you will have to create a specific HTTPS XML sitemap.
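The same-sub-domain, same-protocol rule can be checked mechanically. The sketch below is an assumption about how one might do it with the standard library; `mismatched_urls` is a hypothetical helper name.

```python
from urllib.parse import urlsplit


def mismatched_urls(sitemap_url, urls):
    """Return the URLs whose protocol or host differs from the sitemap's own."""
    sitemap = urlsplit(sitemap_url)
    expected = (sitemap.scheme, sitemap.netloc.lower())
    mismatched = []
    for url in urls:
        parts = urlsplit(url)
        if (parts.scheme, parts.netloc.lower()) != expected:
            mismatched.append(url)
    return mismatched


listed = [
    "https://www.example.com/shoes",
    "http://www.example.com/shoes",      # wrong protocol
    "https://shop.example.com/basket",   # wrong sub-domain
]
print(mismatched_urls("https://www.example.com/sitemap.xml", listed))
```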

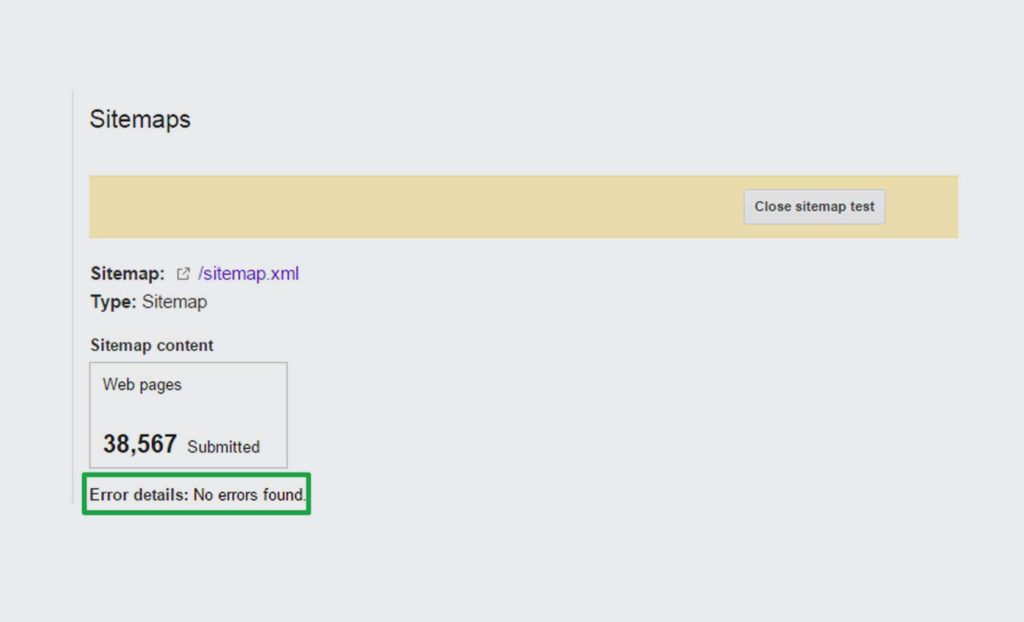

4. Test & Submit Your XML Sitemaps

Once you have your sitemap ready, you’ll need to test it and submit it to search engines. The easiest way of doing this is by going to Google Search Console and testing it there.

Make sure you follow this step, as it will ensure you’ve followed all of the important standards. Once you’ve got confidence in your sitemap, you can submit it to search engines in the following ways:

- Google Search Console / Bing Webmaster Tools: this is the fastest way to ensure search engines are aware of your new sitemap. It will also allow you to track the number of submitted URLs that were indexed, along with other nice features.

- List the sitemap URL in your robots.txt. This step is fairly straightforward, as you just need to place the sitemap URL in the robots.txt file. Here’s an example of how to write the URL in the file:

Sitemap: https://www.example.com/sitemap.xml

Make sure to submit the XML sitemap using all of the above methods for maximum visibility.
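To confirm that the robots.txt entry is in place, a small check like the following sketch can help. It parses the file contents directly; `sitemap_urls_in_robots` is a hypothetical helper and the robots.txt text is illustrative.

```python
def sitemap_urls_in_robots(robots_txt):
    """Return every URL declared on a 'Sitemap:' line of a robots.txt file."""
    found = []
    for line in robots_txt.splitlines():
        # Strip trailing comments and surrounding whitespace.
        line = line.split("#", 1)[0].strip()
        key, sep, value = line.partition(":")
        # The directive name is matched case-insensitively.
        if sep and key.strip().lower() == "sitemap":
            found.append(value.strip())
    return found


robots_example = """User-agent: *
Disallow: /checkout/

Sitemap: https://www.example.com/sitemap.xml
"""
print(sitemap_urls_in_robots(robots_example))
```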

5. Use Atom/RSS Feeds To Inform Search Engines Of Changes

It’s likely that in the near future we will post a more in-depth article explaining what these feeds are, what they do and how to set them up. For now, all webmasters should be looking to do is have one of these feeds set up.

While XML sitemaps are large lists of all the URLs you’d want crawled on your site, RSS/Atom feeds only contain the latest added and recently updated URLs, and are much smaller than sitemaps.

Google themselves have said that sitemaps are downloaded and crawled less often than these feeds, which provide search engines with continuous, up-to-date information about your website. These can be used to get more exposure within search engines, and can be especially beneficial to large websites with very frequently added or updated content.
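To give a rough idea of what such a feed contains, here is a minimal sketch that builds an Atom feed of recently updated pages with the standard library. The feed URL, titles, author name and timestamps are all made up, `build_atom_feed` is a hypothetical helper, and a CMS plugin would normally generate this for you.

```python
import xml.etree.ElementTree as ET

ATOM_NS = "http://www.w3.org/2005/Atom"


def build_atom_feed(feed_url, title, author, pages):
    """Build a small Atom feed.

    `pages` is a list of (url, title, updated) tuples. Assumption: every
    `updated` value is a UTC timestamp in the same "YYYY-MM-DDThh:mm:ssZ"
    form, so plain string comparison finds the most recent one.
    """
    feed = ET.Element("feed", {"xmlns": ATOM_NS})
    ET.SubElement(feed, "id").text = feed_url
    ET.SubElement(feed, "title").text = title
    ET.SubElement(feed, "updated").text = max(page[2] for page in pages)
    ET.SubElement(ET.SubElement(feed, "author"), "name").text = author
    for url, page_title, updated in pages:
        entry = ET.SubElement(feed, "entry")
        ET.SubElement(entry, "id").text = url
        ET.SubElement(entry, "title").text = page_title
        ET.SubElement(entry, "updated").text = updated
        ET.SubElement(entry, "link", {"href": url})
    body = ET.tostring(feed, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


recent_pages = [
    ("https://www.example.com/blog/new-post", "New post", "2024-03-02T08:00:00Z"),
    ("https://www.example.com/shoes", "Shoes", "2024-03-01T17:45:00Z"),
]
feed_xml = build_atom_feed(
    "https://www.example.com/feed.atom", "Example updates", "Example Ltd", recent_pages
)
print(feed_xml)
```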

Other XML Sitemap Features

Sitemaps can provide a varied array of extra information to search engines. I won’t go into much detail around some of these here, as they each could merit their own blog post, but there are some features that are definitely worth mentioning.

One of the nicest features of XML Sitemaps is the ability to tag additional media type URLs with specific extensions.

These extensions allow for any kind of content to be properly highlighted to search engines, giving them more information regarding the kind of content your website handles.
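As one illustration, the sketch below builds a sitemap entry that uses Google's image sitemap extension namespace. The page and image URLs are made up, and `build_image_sitemap` is a hypothetical helper rather than part of any library.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
IMAGE_NS = "http://www.google.com/schemas/sitemap-image/1.1"

# Register prefixes so the output uses a default namespace and "image:" tags.
ET.register_namespace("", SITEMAP_NS)
ET.register_namespace("image", IMAGE_NS)


def build_image_sitemap(pages):
    """`pages` maps each page URL to the list of image URLs shown on it."""
    urlset = ET.Element("{%s}urlset" % SITEMAP_NS)
    for page_url, image_urls in pages.items():
        url = ET.SubElement(urlset, "{%s}url" % SITEMAP_NS)
        ET.SubElement(url, "{%s}loc" % SITEMAP_NS).text = page_url
        for image_url in image_urls:
            image = ET.SubElement(url, "{%s}image" % IMAGE_NS)
            ET.SubElement(image, "{%s}loc" % IMAGE_NS).text = image_url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


image_sitemap = build_image_sitemap({
    "https://www.example.com/shoes": [
        "https://www.example.com/images/shoe-front.jpg",
        "https://www.example.com/images/shoe-side.jpg",
    ],
})
print(image_sitemap)
```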

Final Thoughts

All in all, XML sitemaps are a very powerful tool that makes search engines aware of your website and the rate at which it’s updated. This is why we advise webmasters to ensure that their XML sitemaps are optimised, and to extend the ways they inform search engines as much as possible.

They might seem like a daunting item to create and maintain, but with the right tools and guidelines it’s possible to do this easily. Hopefully, this article will make your life far simpler when dealing with XML sitemaps.
