Why Attribution is a Keystone Technology for Marketers

As a digital marketer with over 15 years of experience under my belt, I have always had attribution as part of the mix.

But for a while it moved to the periphery, as client demand focussed on more day-to-day concerns: improving performance in a particular campaign or launch, new in-channel optimisation efforts, or cross-channel campaigns with siloed measures of success.

The last five years have changed that priority radically for me – and I believe for all other marketers worldwide.

Simply put: the rise of AdTech silos, their auction-based ad pricing systems, and the near-complete digital coverage of every consumer an advertiser would want to target mean that media spend across the board has begun to ‘spin its wheels’. Considered collectively, it now returns higher overall Costs Per Acquisition (CPAs) than it used to, and that’s a serious problem for growth.
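CPA is spend divided by acquisitions; looking at it ‘collectively’ means dividing total spend across every channel by total acquisitions. A minimal Python sketch of that arithmetic, using made-up channel figures purely for illustration (they are not QueryClick or industry data):

```python
from dataclasses import dataclass


@dataclass
class Channel:
    name: str
    spend: float        # media spend in £ (hypothetical)
    acquisitions: int   # conversions credited to this channel


def cpa(spend: float, acquisitions: int) -> float:
    """Cost Per Acquisition: spend divided by acquisitions."""
    if acquisitions <= 0:
        raise ValueError("CPA is undefined with zero acquisitions")
    return spend / acquisitions


def blended_cpa(channels: list[Channel]) -> float:
    """Collective CPA: total spend over total acquisitions across all channels."""
    total_spend = sum(c.spend for c in channels)
    total_acq = sum(c.acquisitions for c in channels)
    return cpa(total_spend, total_acq)


# Hypothetical figures for illustration only.
mix = [
    Channel("paid_search", spend=50_000, acquisitions=1_000),
    Channel("paid_social", spend=30_000, acquisitions=400),
]
for c in mix:
    print(c.name, cpa(c.spend, c.acquisitions))
print("blended", round(blended_cpa(mix), 2))
```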

Marketers live in a world of maximising growth. Our key levers are reducing cost to allow spend to be re-allocated, and identifying where growth can most easily – or cheaply – be attained.

As recently as two to three years ago, it was likely that any customer coming to QueryClick looking for performance optimisation would have, in addition to tactical optimisation opportunities, the opportunity to spend more in an underused paid media channel – and 99% of the time that would be social spend, mainly on Facebook.

However, today, most marketers have maxed out their media spend on social media, leaving little room for optimisation beyond tactical, in-channel improvement.

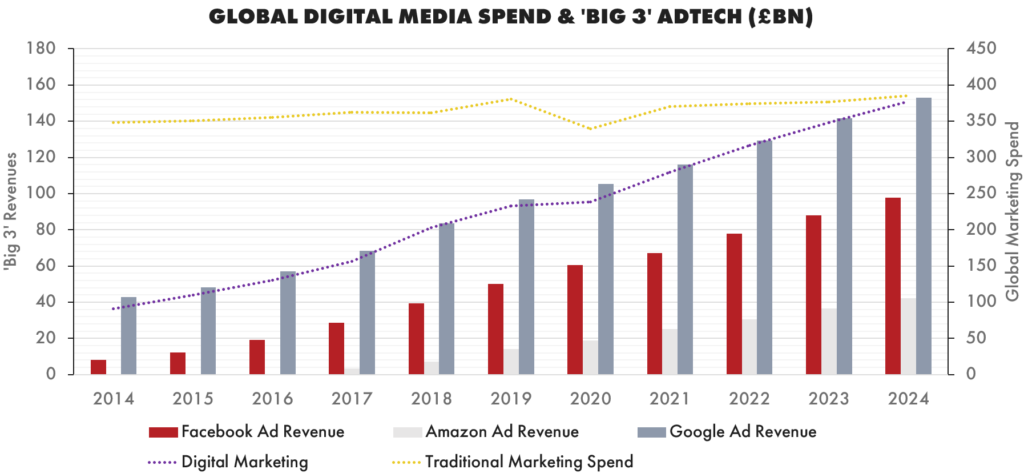

Of course, there is still a lot of value to be had from in-channel tactical optimisation, but the major strategic opportunity of reallocating media spend into under-utilised channels is rarely an option these days. Comparing global ad spend with the revenues generated by the big three AdTech providers, Google, Facebook, and Amazon (as the graph shows), makes this overall ‘catching up’ of Facebook to Google easy to appreciate: Google’s revenue remains dominated by Paid Search, while £27bn more Facebook spend has come into the mix.

Other social platforms are an option, of course, but they essentially target the same broad customer base at more or less the same stage of the conversion path: early, before customers move into a consideration stage where they think more consciously about their purchase and become more active in the main digital channel, search.

What does this mean for marketers today?

What this means is that, whatever the quality of a business’s marketing optimisation, it has always been possible for poorly managed advertisers to spend their way out of trouble by activating new online media ‘green fields’, where CPAs were lower because overall media spend there was still low. For the last five years, social media has been that green field.

Now, however, there are no new green fields. Just a straight optimisation bunfight with an advertiser’s competitors. And that is making many marketers sweat.

Enter attribution.

The (Failed) Promise of Attribution

All marketers are aware of attribution: the idea that how we measure performance should take into account the entirety of a customer’s experience of our marketing activity, rather than just a particular ad or organic engagement. And most marketers will have applied attribution in some form when looking to capture this wider view, typically with a multitouch model, or perhaps even a more sophisticated data-driven model.
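To make the multitouch idea concrete, here is a minimal Python sketch of two common rule-based models: linear, which splits a conversion’s value equally across every touchpoint, and position-based (often called U-shaped), which here gives 40% to the first and last touches and shares the remaining 20% across the middle. The 40/20/40 split is a common convention chosen for illustration rather than a fixed standard, and the channel names are hypothetical.

```python
from collections import defaultdict


def linear(path: list[str], value: float) -> dict[str, float]:
    """Split the conversion value equally across all touchpoints."""
    if not path:
        return {}
    credit: dict[str, float] = defaultdict(float)
    share = value / len(path)
    for touch in path:
        credit[touch] += share  # repeated channels accumulate credit
    return dict(credit)


def position_based(path: list[str], value: float,
                   first: float = 0.4, last: float = 0.4) -> dict[str, float]:
    """U-shaped model: fixed shares to the first and last touch, rest spread over the middle."""
    if not path:
        return {}
    if len(path) == 1:
        return {path[0]: value}
    credit: dict[str, float] = defaultdict(float)
    middle = path[1:-1]
    if not middle:
        # Only two touches: split everything between them in proportion to their weights.
        credit[path[0]] += value * first / (first + last)
        credit[path[-1]] += value * last / (first + last)
        return dict(credit)
    credit[path[0]] += value * first
    credit[path[-1]] += value * last
    mid_share = value * (1 - first - last) / len(middle)
    for touch in middle:
        credit[touch] += mid_share
    return dict(credit)


# Hypothetical journey ending in a £100 sale.
journey = ["social", "display", "email", "search"]
print(linear(journey, 100.0))
print(position_based(journey, 100.0))
```

Both models assume the path is complete and belongs to one person; the weights are set by rule, not derived from what each touch actually contributed.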

The fly in the ointment is that those of us who have implemented these approaches quite quickly realise they don’t help with decision-making at all: they change over time, they don’t match up to cash in bank and, most crucially, when we take action using these approaches we don’t get the performance change we expect. They are unpredictable.

In desperation, some marketers use first-click and last-click views to report on a campaign’s apparent influence on sales when it is, respectively, the first thing a customer sees or the last thing before conversion. The thinking is that a marketer can then use their judgement to assess whether a campaign is meeting its goal of either raising awareness or contributing to conversion.
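First-click and last-click views are the simplest rule-based models: all of a conversion’s value goes to a single touchpoint. A minimal Python sketch with hypothetical journeys shows how the same sales read as ‘awareness’ wins or ‘conversion’ wins depending on which view is chosen:

```python
from typing import Callable

Model = Callable[[list[str], float], dict[str, float]]


def first_click(path: list[str], value: float) -> dict[str, float]:
    """Give 100% of the conversion value to the first recorded touchpoint."""
    return {path[0]: value} if path else {}


def last_click(path: list[str], value: float) -> dict[str, float]:
    """Give 100% of the conversion value to the last touchpoint before conversion."""
    return {path[-1]: value} if path else {}


def report(model: Model, paths: list[list[str]], value: float = 100.0) -> dict[str, float]:
    """Aggregate credit per channel across many journeys under one model."""
    totals: dict[str, float] = {}
    for p in paths:
        for channel, credit in model(p, value).items():
            totals[channel] = totals.get(channel, 0.0) + credit
    return totals


# Hypothetical journeys, each ending in a £100 sale.
journeys = [
    ["social", "display", "search"],
    ["social", "search"],
    ["search"],
]

print("first click:", report(first_click, journeys))
print("last click: ", report(last_click, journeys))
```

Social takes most of the credit in the first-click report and none in the last-click report, and display disappears from both; neither view says how much each touch actually contributed.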

Sometimes, we will even embrace the idea that ‘brand’ campaigns should not be measured at all: we just can’t be sure how much influence they have on conversion, so why bother?

These aren’t just my or my team’s experiences. They are the experiences of 90% of the 200 marketers who responded to a 2018 Censuswide survey we co-sponsored, and of a similar proportion in an updated 2021 survey, which also showed that 80% of respondents flat out don’t trust the reporting they get from their AdTech.

So, what’s going on here – why is attribution failing marketers?

Building a Picture of a Person

At its core, the challenge of attribution is the essential fact that when you are trying to weigh the influence of each interaction with your marketing activity, you need to weigh that interaction against an individual. Not a device, or a household – an individual.

Setting aside the very important topic of data privacy for us to return to later: it is not possible today, and nor will it ever be, to directly measure every possible interaction someone has with our marketing activity.

Traditional marketers understand that challenge, and use other methodologies – statistical measurement, media mix analysis, panel data, and econometrics to report on marketing effectiveness.

In digital, we maintain a belief that because we are generating large volumes of data, we somehow should be able to measure and associate everything back to an individual.

However, this confidence is misplaced, because so little attention is paid to the self-evident truth that we do not access digital content directly as individuals: we use multiple applications, on discrete devices.

When it comes to attribution, data is sand, not oil.

The primary method of measurement across applications and devices is to match IDs together: the IDs from these different environments are linked to associate an individual’s activities across applications, and across the websites they visit using those applications. Those IDs are assumed to be accurate. They are not.
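Matching IDs across environments is, at heart, a graph problem. Every observed pairing of two IDs (for example, the same login seen alongside a browser cookie on a laptop and an app ID on a phone) links them, and each connected group is then treated as one ‘individual’. A minimal union-find sketch with hypothetical IDs shows how this works and why it is fragile: one wrong link merges two people, and one missing link splits one person in two.

```python
class IdentityGraph:
    """Union-find over device/app/cookie IDs (a simplified, hypothetical ID graph)."""

    def __init__(self) -> None:
        self.parent: dict[str, str] = {}

    def find(self, x: str) -> str:
        """Return the representative ID of x's group, registering x if unseen."""
        self.parent.setdefault(x, x)
        # Path halving keeps lookups fast as the graph grows.
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]
            x = self.parent[x]
        return x

    def link(self, a: str, b: str) -> None:
        """Record that IDs a and b were observed together, e.g. via a login."""
        ra, rb = self.find(a), self.find(b)
        if ra != rb:
            self.parent[rb] = ra

    def individuals(self) -> list[set[str]]:
        """Group every known ID by its representative: one set per 'individual'."""
        groups: dict[str, set[str]] = {}
        for x in list(self.parent):
            groups.setdefault(self.find(x), set()).add(x)
        return list(groups.values())


g = IdentityGraph()
g.link("cookie:laptop-chrome", "login:alice")
g.link("app:phone-ios", "login:alice")
g.find("cookie:phone-safari")  # seen, but never linked to a login
print(g.individuals())
```

The Safari cookie on the phone may well belong to the same person, but nothing links it, so its activity is counted as a separate ‘individual’.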

The largest problem for direct measurement is the cookie. Not the one currently dominating headlines for marketers, the 3rd party cookie (a cookie set by a website other than the one we are on), but the 1st party cookie: a cookie set by the website we are visiting, and the method of measurement for 97% of all web data globally.
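Whether a cookie counts as 1st or 3rd party depends only on whose domain set it, relative to the site in the address bar. The Python sketch below is simplified: it compares the last two labels of each hostname as a stand-in for the ‘registrable domain’, which is an assumption; real browsers consult the Public Suffix List, so a host such as `example.co.uk` would be mishandled here. The hostnames are hypothetical.

```python
def registrable_domain(host: str) -> str:
    """Naive approximation: keep the last two DNS labels (ignores the Public Suffix List)."""
    labels = host.lower().strip(".").split(".")
    return ".".join(labels[-2:])


def cookie_party(page_host: str, cookie_host: str) -> str:
    """Classify a cookie as 1st or 3rd party relative to the page being visited."""
    same = registrable_domain(page_host) == registrable_domain(cookie_host)
    return "1st party" if same else "3rd party"


print(cookie_party("www.shop-example.com", "analytics.shop-example.com"))  # 1st party
print(cookie_party("www.shop-example.com", "ads.tracker-example.net"))     # 3rd party
```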

The False Promise of the 1st Party Cookie

All web analytics systems, with the exception of ‘log file’ analytics, use 1st party cookies to ‘stitch’ together multiple hits – the loading of a 1st party pixel – as a web browser interacts with a website.

This is achieved by:

- Checking to see if a site cookie already exists using JavaScript

- If not, creating a unique ID stored in a new cookie which future visits to the website can be associated with

- This ID is then passed into the URL path of the 1st party pixel along with all the other rich information that is created and used in web analytics systems.
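The three steps above normally run as JavaScript in the browser; the Python sketch below simulates the same logic so it can be run anywhere. The cookie name `_site_id`, the pixel URL `collect.example.com/p.gif` and the query parameters are hypothetical, not any particular analytics vendor’s format.

```python
import uuid
from urllib.parse import urlencode

COOKIE_NAME = "_site_id"                         # hypothetical cookie name
PIXEL_URL = "https://collect.example.com/p.gif"  # hypothetical pixel, assumed served from the site's own domain


def get_or_create_id(cookie_jar: dict[str, str]) -> str:
    """Steps 1 and 2: reuse the site cookie if present, otherwise store a new unique ID."""
    if COOKIE_NAME not in cookie_jar:
        cookie_jar[COOKIE_NAME] = uuid.uuid4().hex
    return cookie_jar[COOKIE_NAME]


def pixel_request(cookie_jar: dict[str, str], page: str, event: str) -> str:
    """Step 3: pass the ID, plus other hit data, into the pixel URL."""
    params = {"cid": get_or_create_id(cookie_jar), "page": page, "event": event}
    return f"{PIXEL_URL}?{urlencode(params)}"


browser_cookies: dict[str, str] = {}  # stands in for one browser's cookie store
print(pixel_request(browser_cookies, "/home", "pageview"))
print(pixel_request(browser_cookies, "/checkout", "purchase"))  # same cid as the first hit
```

Both hits share a `cid`, so they can be stitched together. Clear the store, or switch browser or device, and the next hit gets a new ID: that is exactly where the stitching breaks down.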

Since digital marketing began, this process has been used to report on web performance and to ‘prove’ the impact of media spend on sales.

By the time someone transacts, they will have triggered a great number of ‘hits’ and will certainly have an associated ID.

When you look at a ‘Last Click’ report for digital marketing, you’ll see this transaction reported and lots of detail in this measurement system will match up with reality:

- The actual transaction value and any associated tax detail with cash in bank

- The timestamp of the moment of transaction

- The URL used to conduct the transaction itself

- An ID for the transactor – as they will have completed payment using a secure payment system which has verified their identity.

But as soon as you begin to look back in time from that transaction, the accuracy of the data degrades – quickly. This is because it relies on the cookie ‘stitching’ hits together against that transactor’s ID to tell that story.

In fact, when you inspect that raw sequence of hits (a website’s ‘Clickstream’), you will find that on average only about 20% of the data is a complete and correct record that maps back to a transaction with no inaccuracy or incompleteness, while an overwhelming 80% represents partial or incomplete data about the actual individual behind the devices, applications, and sessions being measured.
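Why looking back from the transaction degrades so quickly can be shown with a toy example. Below, one hypothetical person’s true journey spans two devices and a cleared cookie, and cookie stitching only recovers the hits that share the transacting cookie’s ID. The data is invented for illustration, and the completeness measure is only computable here because the toy data includes the ground truth, which a real clickstream does not.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hit:
    person: str     # ground truth, unknown to the analytics system
    cookie_id: str  # what the analytics system actually sees
    touch: str      # channel or event


# Hypothetical journey for one person across a phone, a laptop, and a cookie reset.
hits = [
    Hit("p1", "c-phone", "social"),
    Hit("p1", "c-phone", "display"),
    Hit("p1", "c-laptop-old", "search"),
    Hit("p1", "c-laptop-new", "email"),     # cookies cleared before this visit
    Hit("p1", "c-laptop-new", "purchase"),
]


def cookie_stitched_path(all_hits: list[Hit], transacting_cookie: str) -> list[str]:
    """Everything a cookie-based system can attach to the transaction."""
    return [h.touch for h in all_hits if h.cookie_id == transacting_cookie]


def completeness(all_hits: list[Hit], person: str, transacting_cookie: str) -> float:
    """Share of the person's true hits that cookie stitching recovers."""
    true_hits = [h for h in all_hits if h.person == person]
    seen = [h for h in true_hits if h.cookie_id == transacting_cookie]
    return len(seen) / len(true_hits)


print(cookie_stitched_path(hits, "c-laptop-new"))  # ['email', 'purchase']
print(completeness(hits, "p1", "c-laptop-new"))    # 0.4
```

The purchase itself is recorded correctly, but the social, display and search touches that preceded it are invisible to any report built on the transacting cookie.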

For the purpose of this article, it is important to understand how we are able to measure the completeness and accuracy of 1st party cookie data. To do that, we have to embrace the application of modern data science: Machine Learning (ML) and AI.

The Power of Predictive Models and AI

Advances in cloud computing meant that even back in 2012, when QueryClick first began investigating the accuracy of our clients’ digital marketing data and attempting to join it to offline data such as store footfall and transactions, we were able to work with the UK’s largest retailer by revenue, Tesco, and a number of other high street retailers without immediately racking up the exorbitant bills that would have ended any attempt to process the huge volumes of data generated by websites.

We were investigating if we could use new techniques developed in ML to make a statistical join into those siloed, offline datasets from our rich online data.

That was our entry point into learning about the fundamental unreliability of our clients’ digital data.

Fast forward seven years, and not only have we developed new, proprietary methods to replace the role of the cookie in online data collection (using probabilistic ML to ‘stitch’ together multiple hits based on the probability that each is a continuation of a previous hit), we have also successfully developed a completely new approach to attribution. It uses advanced AI to generate a contribution value for each and every interaction in that joined-up dataset, and in the process allows other datasets to be de-siloed, even those of so-called ‘Walled Gardens’ such as Facebook, Google, and Amazon.
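QueryClick’s own stitching models are proprietary and are not reproduced here. As a generic illustration of linking hits based on the probability that one continues another, the sketch below scores a candidate pair with a hand-weighted logistic function of the time gap and a few shared signals, and links the pair when that probability clears a threshold. The features, weights and threshold are all assumptions chosen for readability; in practice such weights would be learned from labelled data.

```python
import math
from dataclasses import dataclass


@dataclass
class Hit:
    ts: float           # seconds since epoch
    ip_prefix: str      # hypothetical feature: first three octets of the IP
    user_agent: str
    landing_page: str
    referrer_page: str


# Hypothetical, hand-set weights; a real model would learn these from data.
WEIGHTS = {"bias": -2.0, "same_ip": 2.5, "same_ua": 2.0, "page_chain": 1.5, "gap_min": -0.15}


def continuation_probability(prev: Hit, nxt: Hit) -> float:
    """Logistic score that `nxt` continues the visit that produced `prev`."""
    gap_min = max(0.0, (nxt.ts - prev.ts) / 60)
    z = (WEIGHTS["bias"]
         + WEIGHTS["same_ip"] * float(prev.ip_prefix == nxt.ip_prefix)
         + WEIGHTS["same_ua"] * float(prev.user_agent == nxt.user_agent)
         + WEIGHTS["page_chain"] * float(nxt.referrer_page == prev.landing_page)
         + WEIGHTS["gap_min"] * gap_min)  # longer gaps lower the probability
    return 1 / (1 + math.exp(-z))


def should_link(prev: Hit, nxt: Hit, threshold: float = 0.8) -> bool:
    """Link two hits when the continuation probability clears the threshold."""
    return continuation_probability(prev, nxt) >= threshold


a = Hit(0, "81.2.69", "Chrome/Android", "/shoes", "")
b = Hit(60, "81.2.69", "Chrome/Android", "/shoes/red", "/shoes")
c = Hit(3600, "203.0.113", "Safari/iOS", "/home", "")
print(should_link(a, b), should_link(a, c))  # True False
```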

Essentially, we have created a new form of unified marketing analytics: one which uses probabilistic modelling at its heart, and does away with the cookie entirely.

What does this mean?

Well, instead of needing an ID to be passed into the system so that individuals can be tracked across different data silos, we can simply feed the system the siloed data, and the joining is done probabilistically, eliminating the need for any identifiers.

The system we developed generates its own identifier for an individual, does so compliantly, and typically achieves very high predictive accuracy: over 95%. That makes it far more accurate than the old cookie-based analytics systems, and it can function across any dataset supplied to it, as long as that dataset has some level of timestamp data to join along.
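As a hedged illustration of what joining along timestamps can mean, the sketch below pairs clicks from one silo (say, an ad platform export) with session starts from another (site analytics) by nearest timestamp, attaching a simple confidence that shrinks as the gap grows. It is a deliberately naive stand-in, not Corvidae’s method; the schemas, the 30-second tolerance and the linear confidence are all assumptions.

```python
from bisect import bisect_left
from dataclasses import dataclass


@dataclass
class Click:    # from an ad platform export (hypothetical schema)
    ts: float
    campaign: str


@dataclass
class Session:  # from site analytics (hypothetical schema)
    ts: float
    session_id: str


def join_by_time(clicks: list[Click], sessions: list[Session],
                 tolerance: float = 30.0) -> list[tuple[str, str, float]]:
    """Pair each click with the nearest session start within `tolerance` seconds.

    Returns (campaign, session_id, confidence), where confidence falls
    linearly from 1.0 at a zero gap to 0.0 at the tolerance.
    """
    sessions = sorted(sessions, key=lambda s: s.ts)
    times = [s.ts for s in sessions]
    matches: list[tuple[str, str, float]] = []
    for click in clicks:
        i = bisect_left(times, click.ts)
        # The nearest session starts either just before or just after the click.
        candidates = [j for j in (i - 1, i) if 0 <= j < len(times)]
        if not candidates:
            continue
        j = min(candidates, key=lambda k: abs(times[k] - click.ts))
        gap = abs(times[j] - click.ts)
        if gap <= tolerance:
            matches.append((click.campaign, sessions[j].session_id, 1 - gap / tolerance))
    return matches


clicks = [Click(100.0, "spring_sale"), Click(500.0, "brand")]
sessions = [Session(106.0, "s1"), Session(900.0, "s2")]
print(join_by_time(clicks, sessions))
```

Here the ‘brand’ click finds no session within tolerance and stays unjoined. Note that one session can be claimed by several clicks; a fuller linkage model would weigh competing candidates and use many more signals than time alone.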

This is a radically new approach to measurement that steps away from the old, discredited idea that direct ID capture is required to collect and associate data with an individual. Importantly, it also enables digital datasets to be joined with traditional ones.

In order to satisfy data privacy and compliance laws, and to ensure we could bring the technology to the wider marketing world outside of QueryClick’s customer base, we have spent the last five years – and several million pounds! – building a single system that operates entirely in Azure, at scale, which is deployable for all customer types imaginable – from the very largest enterprise customers, to the smallest transacting website just beginning its growth journey.

All we ask is a minimum of 25 transactions a day and a deployed 1st party pixel. The rest is managed by the system.

We call it Corvidae. Having launched it to market in 2021, we are very excited to share it with the world and help marketers build the new measurement system for the modern marketer.
